Regression Model Development (part 1)

Supervised algorithms use inputs (independent variables) and labeled outputs (the dependent variable, i.e., the “answers”) to train a model. Because the answers are known, the model’s performance can be measured and improved over time. Splitting the data into independent and dependent variables gives the following (again, this is very similar to the previous example):
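The cell that performs this split is not shown in this section. The sketch below shows one way it might look. It assumes the data lives in a pandas DataFrame named `df` (a hypothetical name) with the column names used throughout this example. The five rows are copied from the training-set preview in this section.

```python
import pandas as pd

# Hypothetical stand-in for the full iris DataFrame (rows copied from the preview in this section)
df = pd.DataFrame({
    "sepal-length": [6.4, 5.8, 7.4, 5.2, 5.5],
    "sepal-width": [2.7, 2.7, 2.8, 3.5, 4.2],
    "petal-length": [5.3, 3.9, 6.1, 1.5, 1.4],
    "petal-width": [1.9, 1.2, 1.9, 0.2, 0.2],
    "type": ["Iris-virginica", "Iris-versicolor", "Iris-virginica",
             "Iris-setosa", "Iris-setosa"],
}, index=[111, 82, 130, 27, 33])

# Independent variables (features): every column except the target
X = df.drop(columns=["sepal-length"])

# Dependent variable (target), kept as a one-column DataFrame (note the double brackets)
y = df[["sepal-length"]]

print(X.columns.tolist())  # ['sepal-width', 'petal-length', 'petal-width', 'type']
print(y.shape)             # (5, 1)
```

Keeping `y` as a one-column DataFrame is consistent with the two-dimensional prediction arrays (`[[...]]`) that appear later in this section.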

Train Model

Recall that splitting the data into training and testing sets is not required, but it is good practice. It also provides material for part D. As with the previous example, we’ll use the built-ins from scikit-learn (aka sklearn) for this.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# split the variable sets into training and testing subsets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.333, random_state=41)
```

First rows of the training features (`X_train`):

|  | sepal-width | petal-length | petal-width | type |
|---|---|---|---|---|
| 111 | 2.7 | 5.3 | 1.9 | Iris-virginica |
| 82 | 2.7 | 3.9 | 1.2 | Iris-versicolor |
| 130 | 2.8 | 6.1 | 1.9 | Iris-virginica |
| 27 | 3.5 | 1.5 | 0.2 | Iris-setosa |
| 33 | 4.2 | 1.4 | 0.2 | Iris-setosa |

First rows of the training target (`y_train`):

|  | sepal-length |
|---|---|
| 111 | 6.4 |
| 82 | 5.8 |
| 130 | 7.4 |
| 27 | 5.2 |
| 33 | 5.5 |
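With a fractional `test_size`, scikit-learn rounds the number of test rows up. Take a 150-row dataset, which is the size of the classic iris data; this is an assumption here, since the loading step is not shown in this section. With `test_size=0.333` it yields 50 test rows and 100 training rows, which is consistent with the 50-row test table later in this section. The sketch below uses placeholder arrays to check this:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder data with the same number of rows as the iris dataset (150)
X_demo = np.arange(150 * 3).reshape(150, 3)
y_demo = np.arange(150)

X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.333, random_state=41)

# Test size is ceil(0.333 * 150) = ceil(49.95) = 50; the remaining 100 rows go to training
print(len(X_tr), len(X_te))  # 100 50

# Fixing random_state makes the shuffle, and therefore the split, reproducible
X_tr2, X_te2, _, _ = train_test_split(X_demo, y_demo, test_size=0.333, random_state=41)
print(np.array_equal(X_te, X_te2))  # True
```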

Review sklearn’s nice supervised learning library. Note that many of these models come in both classification and regression versions.
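To make the classification/regression pairing concrete, here is a sketch using k-nearest neighbors. It is one family that sklearn offers in both forms, as `KNeighborsClassifier` and `KNeighborsRegressor`. The four data points are hypothetical and chosen only for illustration.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor

# Hypothetical toy data: one feature (petal length)
X = np.array([[1.4], [1.5], [4.5], [5.1]])
y_label = np.array(["Iris-setosa", "Iris-setosa", "Iris-versicolor", "Iris-virginica"])
y_number = np.array([5.1, 4.9, 6.4, 6.3])  # hypothetical sepal lengths

# Same algorithm (k-nearest neighbors), two flavors
clf = KNeighborsClassifier(n_neighbors=1).fit(X, y_label)   # predicts a category
reg = KNeighborsRegressor(n_neighbors=2).fit(X, y_number)   # predicts a number

print(clf.predict([[1.42]]))  # ['Iris-setosa']
print(reg.predict([[1.42]]))  # about 5.0, the mean of the two nearest targets (5.1 and 4.9)
```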

```python
from sklearn.linear_model import LinearRegression
```

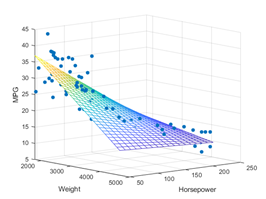

Our data is mostly quantitative, and the scatterplots suggest roughly linear relationships between several of the variables, so linear regression is a reasonable place to start. Once we’ve trained and tested a linear regression model, it will be easy to experiment with different algorithms.
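As a sanity check of what linear regression does, the sketch below fits `LinearRegression` to synthetic, noise-free data built from a known linear formula. The formula and data are invented for illustration. Real measurements such as the iris data are noisy, so fitted coefficients there only approximate the underlying trend.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical, noise-free data generated from y = 0.5 * x1 + 2.0 * x2 + 1.0
rng = np.random.default_rng(0)
X = rng.uniform(0, 5, size=(20, 2))
y = 0.5 * X[:, 0] + 2.0 * X[:, 1] + 1.0

model = LinearRegression().fit(X, y)

# With an exactly linear relationship, least squares recovers the generating numbers
print(np.round(model.coef_, 6))    # [0.5 2. ]
print(round(model.intercept_, 6))  # 1.0
```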

```python
linear_reg_model = LinearRegression()
linear_reg_model.fit(X_train, y_train)
```

```
---------------------------------------------------------------------------
ValueError Traceback (most recent call last)
Cell In[5], line 2
1 linear_reg_model = LinearRegression()
----> 2 linear_reg_model.fit(X_train,y_train)

File ~\.virtualenvs\jupyter-books-WZpnkDri\Lib\site-packages\sklearn\linear_model\_base.py:649, in LinearRegression.fit(self, X, y, sample_weight)
645 n_jobs_ = self.n_jobs
647 accept_sparse = False if self.positive else ["csr", "csc", "coo"]
--> 649 X, y = self._validate_data(
650 X, y, accept_sparse=accept_sparse, y_numeric=True, multi_output=True
651 )
653 sample_weight = _check_sample_weight(
654 sample_weight, X, dtype=X.dtype, only_non_negative=True
655 )
657 X, y, X_offset, y_offset, X_scale = _preprocess_data(
658 X,
659 y,
(...)
662 sample_weight=sample_weight,
663 )

File ~\.virtualenvs\jupyter-books-WZpnkDri\Lib\site-packages\sklearn\base.py:554, in BaseEstimator._validate_data(self, X, y, reset, validate_separately, **check_params)
552 y = check_array(y, input_name="y", **check_y_params)
553 else:
--> 554 X, y = check_X_y(X, y, **check_params)
555 out = X, y
557 if not no_val_X and check_params.get("ensure_2d", True):

File ~\.virtualenvs\jupyter-books-WZpnkDri\Lib\site-packages\sklearn\utils\validation.py:1104, in check_X_y(X, y, accept_sparse, accept_large_sparse, dtype, order, copy, force_all_finite, ensure_2d, allow_nd, multi_output, ensure_min_samples, ensure_min_features, y_numeric, estimator)
1099 estimator_name = _check_estimator_name(estimator)
1100 raise ValueError(
1101 f"{estimator_name} requires y to be passed, but the target y is None"
1102 )
-> 1104 X = check_array(
1105 X,
1106 accept_sparse=accept_sparse,
1107 accept_large_sparse=accept_large_sparse,
1108 dtype=dtype,
1109 order=order,
1110 copy=copy,
1111 force_all_finite=force_all_finite,
1112 ensure_2d=ensure_2d,
1113 allow_nd=allow_nd,
1114 ensure_min_samples=ensure_min_samples,
1115 ensure_min_features=ensure_min_features,
1116 estimator=estimator,
1117 input_name="X",
1118 )
1120 y = _check_y(y, multi_output=multi_output, y_numeric=y_numeric, estimator=estimator)
1122 check_consistent_length(X, y)

File ~\.virtualenvs\jupyter-books-WZpnkDri\Lib\site-packages\sklearn\utils\validation.py:877, in check_array(array, accept_sparse, accept_large_sparse, dtype, order, copy, force_all_finite, ensure_2d, allow_nd, ensure_min_samples, ensure_min_features, estimator, input_name)
875 array = xp.astype(array, dtype, copy=False)
876 else:
--> 877 array = _asarray_with_order(array, order=order, dtype=dtype, xp=xp)
878 except ComplexWarning as complex_warning:
879 raise ValueError(
880 "Complex data not supported\n{}\n".format(array)
881 ) from complex_warning

File ~\.virtualenvs\jupyter-books-WZpnkDri\Lib\site-packages\sklearn\utils\_array_api.py:185, in _asarray_with_order(array, dtype, order, copy, xp)
182 xp, _ = get_namespace(array)
183 if xp.__name__ in {"numpy", "numpy.array_api"}:
184 # Use NumPy API to support order
--> 185 array = numpy.asarray(array, order=order, dtype=dtype)
186 return xp.asarray(array, copy=copy)
187 else:

File ~\.virtualenvs\jupyter-books-WZpnkDri\Lib\site-packages\pandas\core\generic.py:2070, in NDFrame.__array__(self, dtype)
2069 def __array__(self, dtype: npt.DTypeLike | None = None) -> np.ndarray:
-> 2070 return np.asarray(self._values, dtype=dtype)

ValueError: could not convert string to float: 'Iris-virginica'
```

Error? Wait, what happened?! This error produces a lot of output, but the last line makes things clear:

```
ValueError: could not convert string to float: 'Iris-virginica'
```

The algorithm expected numbers; it does not know what to do with the flower types (strings). So how do we fix this?
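The last frame of the traceback is NumPy trying to turn the DataFrame’s values into floats. A minimal sketch reproduces the same failure without scikit-learn. It uses a hypothetical mixed column like the rows of `X_train`, which still hold the `type` strings.

```python
import numpy as np

# A row mixing numbers and a string, like X_train while it still contains 'type'
mixed = np.array([2.7, 5.3, "Iris-virginica"], dtype=object)

try:
    # Convert to float, as scikit-learn's input validation attempts (see the last frame above)
    np.asarray(mixed, dtype=float)
    message = None
except ValueError as err:
    message = str(err)

print(message)  # could not convert string to float: 'Iris-virginica'
```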

Processing Categorical Data (the easy way)

One way to fix a problem is to avoid it. You are not required to use all the data, only some of it. Sometimes choosing the right variables is the real trick, and dimensionality reduction is an important part of the data sciences. Here, those flower types DO matter, and it would be best to include that data, but goal #1 is to get things working. Improving things is step #2 and step #3 and step #4 and … step \(\# \infty\).

So just to get things rolling, let’s remove the column with the categorical data:

```python
X_train_no_type = X_train.drop(columns=['type'])
X_test_no_type = X_test.drop(columns=['type'])
X_test_no_type
```

|  | sepal-width | petal-length | petal-width |
|---|---|---|---|
| 119 | 2.2 | 5.0 | 1.5 |
| 128 | 2.8 | 5.6 | 2.1 |
| 135 | 3.0 | 6.1 | 2.3 |
| 91 | 3.0 | 4.6 | 1.4 |
| 112 | 3.0 | 5.5 | 2.1 |
| 71 | 2.8 | 4.0 | 1.3 |
| 123 | 2.7 | 4.9 | 1.8 |
| 85 | 3.4 | 4.5 | 1.6 |
| 147 | 3.0 | 5.2 | 2.0 |
| 143 | 3.2 | 5.9 | 2.3 |
| 127 | 3.0 | 4.9 | 1.8 |
| 39 | 3.4 | 1.5 | 0.2 |
| 38 | 3.0 | 1.3 | 0.2 |
| 93 | 2.3 | 3.3 | 1.0 |
| 23 | 3.3 | 1.7 | 0.5 |
| 133 | 2.8 | 5.1 | 1.5 |
| 30 | 3.1 | 1.6 | 0.2 |
| 83 | 2.7 | 5.1 | 1.6 |
| 37 | 3.1 | 1.5 | 0.1 |
| 41 | 2.3 | 1.3 | 0.3 |
| 81 | 2.4 | 3.7 | 1.0 |
| 120 | 3.2 | 5.7 | 2.3 |
| 43 | 3.5 | 1.6 | 0.6 |
| 2 | 3.2 | 1.3 | 0.2 |
| 64 | 2.9 | 3.6 | 1.3 |
| 62 | 2.2 | 4.0 | 1.0 |
| 56 | 3.3 | 4.7 | 1.6 |
| 67 | 2.7 | 4.1 | 1.0 |
| 49 | 3.3 | 1.4 | 0.2 |
| 63 | 2.9 | 4.7 | 1.4 |
| 79 | 2.6 | 3.5 | 1.0 |
| 54 | 2.8 | 4.6 | 1.5 |
| 106 | 2.5 | 4.5 | 1.7 |
| 90 | 2.6 | 4.4 | 1.2 |
| 145 | 3.0 | 5.2 | 2.3 |
| 14 | 4.0 | 1.2 | 0.2 |
| 141 | 3.1 | 5.1 | 2.3 |
| 51 | 3.2 | 4.5 | 1.5 |
| 139 | 3.1 | 5.4 | 2.1 |
| 70 | 3.2 | 4.8 | 1.8 |
| 97 | 2.9 | 4.3 | 1.3 |
| 55 | 2.8 | 4.5 | 1.3 |
| 32 | 4.1 | 1.5 | 0.1 |
| 104 | 3.0 | 5.8 | 2.2 |
| 136 | 3.4 | 5.6 | 2.4 |
| 18 | 3.8 | 1.7 | 0.3 |
| 108 | 2.5 | 5.8 | 1.8 |
| 98 | 2.5 | 3.0 | 1.1 |
| 45 | 3.0 | 1.4 | 0.3 |
| 68 | 2.2 | 4.5 | 1.5 |
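Dropping `'type'` by name works because we know which column causes the problem. A more general alternative is pandas’ `select_dtypes`, which keeps only the numeric columns. The sketch below assumes that is what you want. The three-row frame is a hypothetical stand-in for `X_train`, using values from the training preview.

```python
import pandas as pd

# Hypothetical stand-in for X_train (values from the training preview)
X_small = pd.DataFrame({
    "sepal-width": [2.7, 2.7, 2.8],
    "petal-length": [5.3, 3.9, 6.1],
    "petal-width": [1.9, 1.2, 1.9],
    "type": ["Iris-virginica", "Iris-versicolor", "Iris-virginica"],
}, index=[111, 82, 130])

# 'type' holds strings (object dtype in most pandas versions); the others are float64
print(X_small.dtypes)

# Keep only numeric columns; this drops 'type' without naming it
X_numeric = X_small.select_dtypes(include="number")
print(X_numeric.columns.tolist())  # ['sepal-width', 'petal-length', 'petal-width']
```

The trade-off is that `select_dtypes` silently drops *every* non-numeric column. Dropping by name is more explicit about what is being left out.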

Now the model trains without error:

```python
linear_reg_model.fit(X_train_no_type, y_train)
```


LinearRegression()

And the model can make predictions for an entire set:

```python
y_pred_no_type = linear_reg_model.predict(X_test_no_type)
y_pred_no_type
```

```
array([[5.98770812],
       [6.42220879],
       [6.81026327],
       [6.3038615 ],
       [6.48153317],
       [5.75587752],
       [6.02106191],
       [6.34587216],
       [6.31716812],
       [6.788514  ],
       [6.23165894],
       [5.01764515],
       [4.57470184],
       [5.07393461],
       [4.87302581],
       [6.48997581],
       [4.88812177],
       [6.34092093],
       [4.885904  ],
       [4.00445291],
       [5.46842817],
       [6.62636673],
       [4.85349432],
       [4.71509986],
       [5.50178197],
       [5.57125107],
       [6.43782043],
       [6.00331976],
       [4.86637251],
       [6.31473613],
       [5.44667891],
       [6.08460761],
       [5.63522521],
       [6.01862993],
       [6.08060051],
       [5.19561829],
       [6.06972588],
       [6.28433001],
       [6.47065854],
       [6.29098332],
       [6.06929744],
       [6.16124571],
       [5.58789409],
       [6.64589822],
       [6.60683523],
       [5.3817326 ],
       [6.61032665],
       [4.89225584],
       [4.57691961],
       [5.58233992]])
```

Or a single input:

```python
import numpy as np
import pandas as pd

# The model was fit on a DataFrame, so it expects inputs with the same column names.
# Recall that we removed the flower type; we are predicting sepal-length.
column_names_short = ['sepal-width', 'petal-length', 'petal-width']

# Create a one-row DataFrame for the input; matching the training column names
# avoids a warning about missing feature names.
# Alternatively, print(linear_reg_model.predict([[3.2, 1.3, .2]])) also runs,
# but scikit-learn then warns that X does not have valid feature names.
input_df = pd.DataFrame(np.array([[3.2, 1.3, .2]]), columns=column_names_short)

print(linear_reg_model.predict(input_df))
```

```
[[4.71509986]]
```

Note

Your model expects new inputs in the same form as the data it was trained with. Since this model was trained on a DataFrame, a matching one-row DataFrame, `input_df`, was created to predict a single input. Alternatively, we could have converted the original data to arrays before fitting, using `.values` (and `ravel()` to flatten the target) (see the previous example).
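Here is a sketch of the array-based alternative mentioned in the note. It uses a small hypothetical dataset in place of `X_train_no_type` and `y_train`. The model is fit on NumPy arrays: `.to_numpy()` is the currently recommended equivalent of `.values`, and the target is flattened with `ravel()`. A model fitted without feature names accepts a plain nested list with no feature-name warning. A 1-D target also gives 1-D predictions rather than the `(n, 1)` arrays shown above.

```python
import warnings

import pandas as pd
from sklearn.linear_model import LinearRegression

# Hypothetical stand-ins for X_train_no_type and y_train (values from the training preview)
X_df = pd.DataFrame({
    "sepal-width": [2.7, 2.7, 2.8, 3.5, 4.2],
    "petal-length": [5.3, 3.9, 6.1, 1.5, 1.4],
    "petal-width": [1.9, 1.2, 1.9, 0.2, 0.2],
})
y_df = pd.DataFrame({"sepal-length": [6.4, 5.8, 7.4, 5.2, 5.5]})

# Convert to NumPy: a 2-D feature array and a flattened 1-D target
X_arr = X_df.to_numpy()
y_arr = y_df.to_numpy().ravel()

model = LinearRegression().fit(X_arr, y_arr)

# No feature names were seen during fit, so a plain list is accepted as-is
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    pred = model.predict([[3.2, 1.3, 0.2]])

# Collect any feature-name warnings (expected: none)
name_warnings = [w for w in caught if "feature names" in str(w.message)]
print(len(name_warnings))  # 0
print(pred.shape)          # (1,): a single value rather than [[...]]
```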

Accuracy Analysis (for Regression) part 1

Now that the model works, we can work on improving it. But first we’ll need metrics so we can tell if we’re making progress. As we are trying to predict a continuous number, even the very best model will have errors in almost every prediction (if not, it’s almost certainly overfitted). Whereas measuring the success of our classification model was a simple ratio, here we need a way to measure how much those predictions deviate from actual values. See sklearn’s list of metrics and scoring for regression.

To get things started, we’ll use the mean squared error (MSE), a popular metric for evaluating regression models. Regression metrics are covered in more depth in the [Regression Accuracy Analysis](sup_reg_ex:develop:accuracy) section.
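For reference, take \(n\) test samples with actual values \(y_i\) and predictions \(\hat{y}_i\). The mean squared error is the average squared deviation:

\[
\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2
\]

Squaring makes every deviation count positively and penalizes large misses more heavily than small ones. It also means the result is in squared units of the target.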

```python
from sklearn.metrics import mean_squared_error

mean_squared_error(y_test, y_pred_no_type)
```

```
0.12264182555541722
```

What does this mean? The closer the MSE is to 0, the better. However, the MSE is in squared units of the dependent variable, not in the units of sepal-length itself. MSE values are also not comparable across use cases: comparing the MSE from your project to that of a model built on different data is not comparing “apples to apples.” A “good” MSE depends on your data and project needs.
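One common way to get back to the units of the dependent variable is the root mean squared error (RMSE), the square root of the MSE. The sketch below uses three hypothetical actual/predicted pairs. It computes the MSE by hand with NumPy, checks it against sklearn’s `mean_squared_error`, and takes the square root. Applied to the MSE above, \(\sqrt{0.1226} \approx 0.35\): predictions are off by roughly 0.35 (in sepal-length units) in a root-mean-square sense.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Hypothetical actual and predicted sepal lengths
y_true = np.array([6.4, 5.8, 7.4])
y_hat = np.array([6.1, 5.9, 7.0])

# Deviations are 0.3, -0.1, 0.4; their squares are 0.09, 0.01, 0.16
mse_manual = np.mean((y_true - y_hat) ** 2)
mse_sklearn = mean_squared_error(y_true, y_hat)

# RMSE is back in the units of the target
rmse = np.sqrt(mse_sklearn)

print(round(mse_manual, 4), round(mse_sklearn, 4))  # 0.0867 0.0867
print(round(rmse, 4))                               # 0.2944
```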

This value can be used to determine whether tweaks improve the results. For example, the visualizations of this dataset suggest that the regression coefficients (the numbers that set the slope of the fitted relationship along each feature) should be positive. So we might try `LinearRegression(positive=True)`, which constrains all coefficients to be non-negative.
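To see what the constraint does, here is a minimal sketch on hypothetical data where the true slope is negative. Ordinary least squares finds the negative slope. The constrained fit cannot, so it sets the slope to zero and falls back to predicting the mean of `y`. The constraint only helps when the relationships really are positive, as the visualizations suggest for this dataset.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical data with a perfectly negative relationship: y = 3 - x
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = np.array([3.0, 2.0, 1.0, 0.0])

ols = LinearRegression().fit(X, y)
nonneg = LinearRegression(positive=True).fit(X, y)

print(ols.coef_, ols.intercept_)        # slope about -1, intercept about 3
print(nonneg.coef_, nonneg.intercept_)  # slope 0, intercept 1.5 (the mean of y)
```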

```python
linear_reg_model2 = LinearRegression(positive=True)
linear_reg_model2.fit(X_train_no_type, y_train)

y_pred_no_type = linear_reg_model2.predict(X_test_no_type)
mean_squared_error(y_test, y_pred_no_type)
```

```
0.10302108975537984
```

And \(0.103 < 0.123\): on this test set, the constrained model’s predictions are slightly closer to the actual values.
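Every sklearn regressor shares the same `fit`/`predict` interface, so this MSE check extends naturally to other tweaks and algorithms. The sketch below is self-contained. It uses the `load_iris()` data bundled with scikit-learn instead of this notebook’s DataFrame, so its MSE values will not necessarily match the numbers above. The candidate list is illustrative, not a recommendation. The split variables are named `X_tr`, `X_te`, and so on so they do not overwrite the notebook’s `X_train`/`X_test`.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Columns of load_iris().data: sepal length, sepal width, petal length, petal width
data = load_iris().data
X = data[:, 1:]  # sepal width, petal length, petal width (flower type left out)
y = data[:, 0]   # sepal length

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.333, random_state=41)

# Candidate models to compare; any sklearn regressor could be added here
candidates = {
    "linear": LinearRegression(),
    "linear, positive": LinearRegression(positive=True),
    "decision tree (depth 3)": DecisionTreeRegressor(max_depth=3, random_state=0),
}

scores = {}
for name, model in candidates.items():
    model.fit(X_tr, y_tr)
    scores[name] = mean_squared_error(y_te, model.predict(X_te))

# Lower MSE is better; print from best to worst
for name, mse in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{name}: {mse:.3f}")
```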